
Powered by MOMENTUMMEDIA


Realistic AI-generated images depicting the Israel-Gaza conflict are being sold on Adobe's stock image subscription service and used by online media publications.

Adobe's stock subscription service allows contributors to upload and sell images, including AI-generated ones, as part of the company's broader adoption of generative artificial intelligence (AI), which also includes its Firefly tools.

Contributors who submit AI-generated images to Adobe's stock image service must disclose that they were made with AI, and such images carry a "generated with AI" label on the site.

Some of these images are so realistic that, without these labels, they could easily be mistaken for genuine photographs.

That concern is now becoming a reality: a number of publications have used AI images depicting the Israel-Hamas war without disclosing that they were AI-generated.

The images portray explosions in civilian-populated areas and women and children fleeing combat zones and flames, appearing photorealistic despite being fabricated.

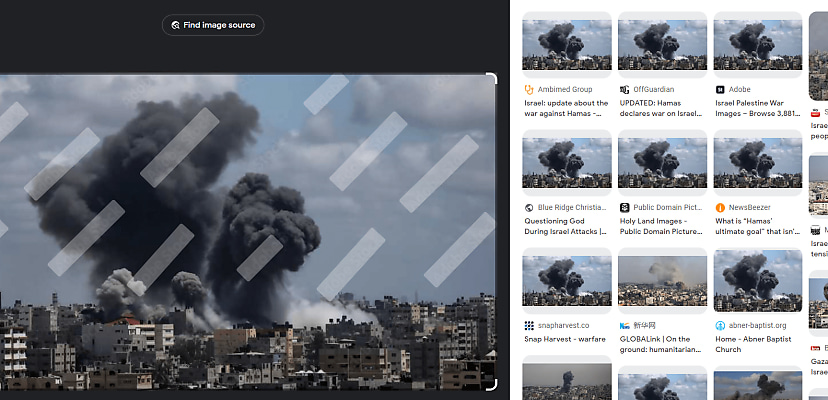

For example, a reverse image search of one such image by Scheidle-Design, titled "conflict between israel and palestine generative ai" and showing clouds of black smoke rising from buildings, reveals that it has been used by a large number of news publications, as shown in the image above.

The concern is that these publications are, knowingly or otherwise, spreading misinformation during a conflict in which disinformation and propaganda are rife and it is already difficult to tell what is real from what is fake.

Layla Mashkoor, associate editor at the Atlantic Council's Digital Forensic Research Lab, said that generative AI has already played a large role in influencing supporters on each side.

“Examples include an AI-generated billboard in Tel Aviv championing Israel Defense Forces, an Israeli account sharing fake images of people cheering for the IDF, an Israeli influencer using AI to generate condemnations of Hamas, and AI images portraying victims of Israel’s bombardment of Gaza,” she said.

Even so, Mashkoor said these images are largely used just to "drum up support, which is not among the most malicious ways to utilise AI right now", and that their impact is limited by the sheer volume of content already circulating online.

“The information space is already being flooded with real and authentic images and footage, and that in itself is flooding the social media platforms,” she said.

Regardless, these images provoke an emotional response and, whether or not that is the intent of those publishing them, can sway the opinions of the people who see them, particularly when they accompany a news article.

Misinformation and weaponised disinformation are major concerns surrounding the adoption of AI. According to a Forbes Advisor survey, 76 per cent of consumers are concerned that AI tools such as Google Bard, ChatGPT and Bing Chat could generate misinformation.

OpenAI chief executive Sam Altman, whose company makes ChatGPT, has also said that his greatest concern about the development of AI is its ability to spread misinformation.

"My areas of greatest concern [are] the more general abilities for these models to manipulate, to persuade, to provide sort of one-on-one interactive disinformation," he told Congress in May.

“Given that we’re going to face an election next year, and these models are getting better, I think this is a significant area of concern, [and] I think there are a lot of policies that companies can voluntarily adopt.”
