Powered by MOMENTUMMEDIA

AMD continues to take the fight to NVIDIA, with the chip giant announcing a new range of artificial intelligence (AI) chipsets, including a generative AI accelerator it describes as industry-leading.

AMD is not the first to launch AI chips: Intel has launched its own offerings, and rival NVIDIA accounts for more than 70 per cent of AI chip sales. Even so, AMD is calling its latest offering the most powerful on the planet.

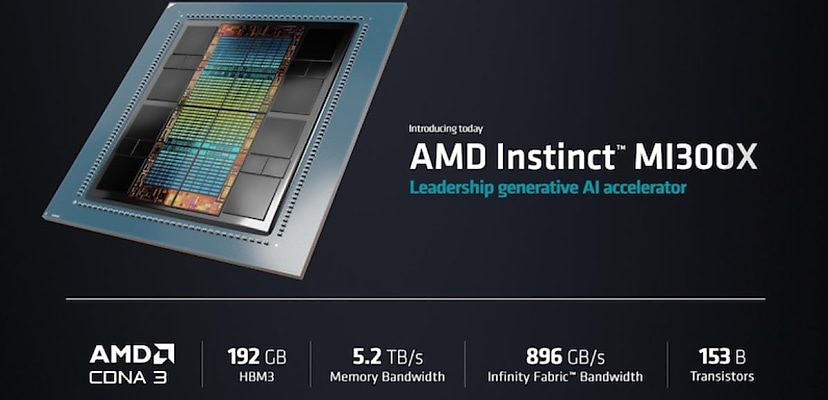

The new chip, the AMD Instinct MI300X, succeeds the MI200 series and is the third generation of the accelerator line AMD began in 2020 with the MI100, which the company billed as the first GPU architecture purpose-built for FP64 and FP32 HPC.

Built on AMD’s CDNA3 architecture, the MI300X has 192 gigabytes (GB) of memory and a peak theoretical memory bandwidth of 5.3 terabytes per second (TB/s): 1.5 times the capacity and 1.7 times the bandwidth of the previous flagship AI chipset, the MI250X.
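The quoted multiples can be turned around to see what they imply about the older chip. The short sketch below divides the MI300X figures by AMD's stated 1.5 and 1.7 multiples; the helper name `implied_baseline` is purely illustrative, and because 1.7 is a rounded figure the bandwidth result is only approximate.

```python
# MI300X figures and AMD's quoted multiples over the MI250X, as stated in the article.
MI300X_MEMORY_GB = 192
MI300X_BANDWIDTH_TBPS = 5.3
CAPACITY_MULTIPLE = 1.5
BANDWIDTH_MULTIPLE = 1.7


def implied_baseline(new_value: float, multiple: float) -> float:
    """Back out the previous-generation figure implied by an 'N times' claim."""
    return new_value / multiple


mi250x_memory_gb = implied_baseline(MI300X_MEMORY_GB, CAPACITY_MULTIPLE)
mi250x_bandwidth_tbps = implied_baseline(MI300X_BANDWIDTH_TBPS, BANDWIDTH_MULTIPLE)

print(f"Implied MI250X memory:    {mi250x_memory_gb:.0f} GB")
print(f"Implied MI250X bandwidth: {mi250x_bandwidth_tbps:.1f} TB/s")
```

This gives 128 GB and roughly 3.1 TB/s, in line with the MI250X's published 128 GB of HBM2e at about 3.2 TB/s once the rounding of the 1.7 multiple is taken into account.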

Additionally, it delivers roughly 1.9 times the performance per watt on FP32 HPC and AI workloads and is 30 times more energy-efficient than the MI250X.

During her keynote speech at AMD’s Advancing AI event in California, AMD president, chairman and chief executive Dr Lisa Su said the new chipset is “the highest performance accelerator in the world for generative AI”.

“AI is the most transformational technology in 50 years. Maybe the only thing close is the introduction of the internet, but with AI, the adoption has been much, much quicker, and we’re only at the beginning of the AI era,” she added.

“ChatGPT has sparked a revolution that transformed the technology landscape. AI hasn’t simply progressed but exploded. The year has shown us AI isn’t simply a cool new thing, but the future of IT.”

AMD said the closest accelerator on the market was NVIDIA’s H100. While the two offer similar bandwidth and network performance, AMD says its new chip has 2.4 times the memory and 1.3 times the computing power.

The new chip has already won backing from industry heavyweights, with Microsoft Azure and Oracle Cloud Infrastructure both committed to adopting it.

OpenAI researcher Philippe Tillet also said the company would be supporting AMD’s new technology.

“OpenAI is working with AMD in support of an open ecosystem. We plan to support AMD’s GPUs, including MI300, in the standard Triton distribution starting with the upcoming 3.0 release.”

AI has already been adopted in a number of critical industries, including security, healthcare and energy.

However, the technology requires heavy-duty infrastructure and a lot of power to run. These AI tools rely on models with billions of parameters, trained on massive data sets, and are expected to return results in seconds.
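As a rough illustration of why memory capacity matters for models of this size, the sketch below estimates the memory needed just to hold a model's weights and checks it against a single accelerator. The 70-billion-parameter model and the 2 bytes per parameter (FP16/BF16) are hypothetical assumptions. The estimate ignores activations, KV cache and software overhead, and the H100 figure is simply 192 GB divided by AMD's quoted 2.4 times multiple.

```python
# Rough, weights-only memory estimate for serving a model on one accelerator.
# Hypothetical assumptions: FP16/BF16 weights (2 bytes per parameter);
# activations, KV cache and framework overhead are ignored.
BYTES_PER_PARAM_FP16 = 2
GB = 10**9  # decimal gigabytes

MI300X_MEMORY_GB = 192
# Implied by AMD's "2.4 times the memory" comparison with the H100.
H100_MEMORY_GB = MI300X_MEMORY_GB / 2.4


def weights_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Memory needed just to store the model weights, in decimal GB."""
    return num_params * bytes_per_param / GB


def fits(num_params: int, memory_gb: float) -> bool:
    """True if the weights alone fit within the given accelerator memory."""
    return weights_gb(num_params) <= memory_gb


params = 70 * 10**9  # hypothetical 70-billion-parameter model
print(f"Weights: {weights_gb(params):.0f} GB")
print(f"Fits on one MI300X ({MI300X_MEMORY_GB} GB):", fits(params, MI300X_MEMORY_GB))
print(f"Fits on one H100 ({H100_MEMORY_GB:.0f} GB):", fits(params, H100_MEMORY_GB))
```

Under these assumptions the weights need about 140 GB, which fits within 192 GB but not within the implied 80 GB, matching the H100's published 80 GB configuration. Real deployments need headroom beyond the weights, so treat this as a lower bound.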

AMD has tried to estimate how quickly the market for data centre AI accelerators will grow. Its original forecast was a compound annual growth rate (CAGR) of 50 per cent, taking the market from US$30 billion in 2023 to about US$150 billion in 2027.

However, a later revision put growth at more than 70 per cent CAGR, with the market rising from US$45 billion in 2023 to more than US$400 billion in 2027.
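The compound-growth arithmetic behind these forecasts can be checked with a short sketch. It assumes four years of compounding (2023 to 2027); the helper names `project` and `implied_cagr` are illustrative only.

```python
def project(start: float, cagr: float, years: int) -> float:
    """Value after compounding `start` at annual growth rate `cagr` for `years` years."""
    return start * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


YEARS = 2027 - 2023  # four years of compounding

# Original forecast: US$30 billion growing at 50 per cent a year.
print(f"50% CAGR from $30B: ${project(30, 0.50, YEARS):.1f}B in 2027")
# Revised forecast: US$45 billion, with US$400 billion quoted for 2027.
print(f"70% CAGR from $45B: ${project(45, 0.70, YEARS):.1f}B in 2027")
print(f"CAGR needed for $45B -> $400B: {implied_cagr(45, 400, YEARS):.1%}")
```

At 50 per cent, US$30 billion compounds to roughly US$152 billion, matching the original figure. At exactly 70 per cent, US$45 billion reaches only about US$376 billion, so the US$400 billion figure implies growth of roughly 73 per cent a year, somewhat above 70 per cent.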

Be the first to hear the latest developments in the cyber industry.