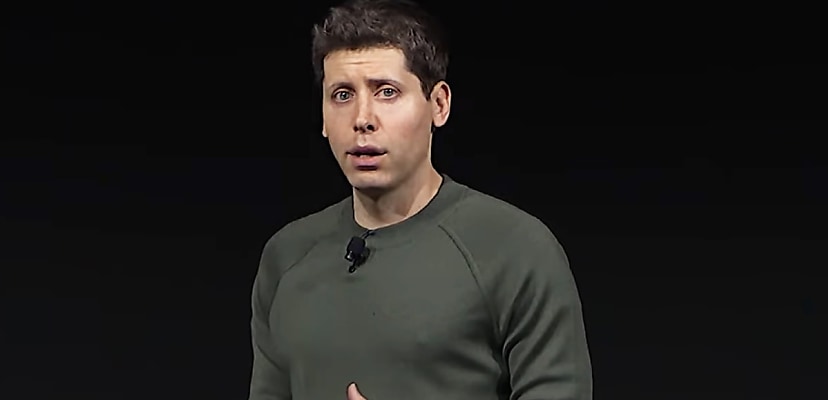

OpenAI CEO Sam Altman has asked those excited by his company's products to calm down and lower their expectations surrounding artificial general intelligence (AGI).

For context, AGI refers to AI systems that can learn and complete a broad range of tasks at a human level.

Earlier this month, Altman said that OpenAI was turning its aim beyond AGI to superintelligence, adding that he was confident AGI would be developed.

“We are beginning to turn our aim beyond [AGI], to superintelligence in the true sense of the word,” said Altman.

“We love our current products, but we are here for the glorious future.”

Additionally, as writer Gwern Branwen pointed out in a post, excitement around OpenAI and AGI has been building as the company continues to improve its ChatGPT models.

“Several OpenAI staff have been telling friends they are both jazzed & spooked by recent progress. Watching the improvement from the original 4o model to o3 (and wherever it is now!) may be why. It’s like watching the AlphaGo Elo curves: it just keeps going up... and up... and up…,” said Branwen.

Now, Altman has made a post of his own to temper the hype, asking fans of OpenAI to lower their expectations.

“Twitter hype is out of control again,” he said in a post to X.

“We are not gonna deploy AGI next month, nor have we built it.

“We have some very cool stuff for you but pls chill and cut your expectations 100x!”

— Sam Altman (@sama) January 20, 2025


In 2023, a focus on superintelligence and superhuman AI landed Altman in hot water when the OpenAI board fired him, reportedly after hearing that the company was working on potentially dangerous artificial intelligence.

The AI in question is called Q* (pronounced Q Star), an OpenAI project in pursuit of what is known as artificial general intelligence (AGI), or superintelligence, which the company has described as AI that is smarter than human beings.

Q* had reportedly been making serious progress, with the model performing tasks that could revolutionise AI.

Current AI models, like OpenAI’s GPT-4, are very good at writing because they predict the most likely next word, but as a result, their answers vary and are not always correct.

Researchers believe that an AI tool capable of solving and properly understanding mathematical problems, where there is only one correct answer, would be a major breakthrough in the development of superintelligence.

This is reportedly what Q* began to do. Although it only solved equations at a school level and was extremely resource-heavy, researchers believe this to be a significant step.

It’s worth noting that this is not like a calculator that solves certain equations as you enter them. ChatGPT can already do that, thanks to the massive library of data it was trained on. A system like Q* is reportedly able to learn and properly understand the process.

The OpenAI board was reportedly displeased with Altman because news of these developments had been kept quiet.

“A little over a year ago, on one particular Friday, the main thing that had gone wrong that day was that I got fired by surprise on a video call, and then right after we hung up the board published a blog post about it. I was in a hotel room in Las Vegas. It felt, to a degree that is almost impossible to explain, like a dream gone wrong,” Altman said in a blog post earlier this month.

“The whole event was, in my opinion, a big failure of governance by well-meaning people, myself included. Looking back, I certainly wish I had done things differently, and I’d like to believe I’m a better, more thoughtful leader today than I was a year ago.

“I also learned the importance of a board with diverse viewpoints and broad experience in managing a complex set of challenges. Good governance requires a lot of trust and credibility. I appreciate the way so many people worked together to build a stronger system of governance for OpenAI that enables us to pursue our mission of ensuring that AGI benefits all of humanity.”
